Docker Swarm mode is a container orchestration and clustering tool for managing Docker hosts. It is built into Docker Engine, so you don’t have to install anything other than Docker Engine to use it.

Docker swarm mode was introduced in Docker 1.12. Its key benefits include container self-healing, load balancing, scaling containers up and down, service discovery and rolling updates.

In this article we will walk through how to install and configure Docker Swarm mode on CentOS 7.x / RHEL 7.x. For the demonstration I will be using three CentOS 7.x or RHEL 7.x servers, on each of which I will install Docker Engine. Two of the servers will act as worker nodes and one will act as the manager. In my case I am using the following:

- dkmanager.example.com (172.168.10.70) – It will act as the manager, which manages the worker nodes, and it will run containers as a Docker engine as well.

- workernode1.example.com (172.168.10.80) – It will act as a Docker engine (worker node).

- workernode2.example.com (172.168.10.90) – It will act as a Docker engine (worker node).

Add the following lines to the /etc/hosts file on all the servers:

    172.168.10.70 dkmanager.example.com dkmanager
    172.168.10.80 workernode1.example.com workernode1
    172.168.10.90 workernode2.example.com workernode2
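If you would rather script this step than edit the file by hand on three servers, here is a minimal sketch. The helper name `add_host_entry` is my own invention for illustration; it only appends a line when the hostname is not already present, so running it twice is harmless.

```shell
#!/bin/sh
# add_host_entry FILE IP FQDN SHORTNAME   (hypothetical helper, not a standard tool)
# Appends "IP FQDN SHORTNAME" to FILE unless FILE already mentions FQDN.
add_host_entry() {
    file=$1
    ip=$2
    fqdn=$3
    short=$4
    # -F: match the name literally, -w: whole words only, -q: no output
    if grep -qFw -- "$fqdn" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
}

# Example (run as root on every server):
#   add_host_entry /etc/hosts 172.168.10.70 dkmanager.example.com dkmanager
#   add_host_entry /etc/hosts 172.168.10.80 workernode1.example.com workernode1
#   add_host_entry /etc/hosts 172.168.10.90 workernode2.example.com workernode2
```

The function takes the file path as an argument, so you can try it against a scratch file first before touching /etc/hosts.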

Step:1 Install Docker Engine on all the hosts

First set up the Docker repository, then install and start Docker Engine by running the commands below on all the hosts.

    [root@dkmanager ~]# yum install yum-utils -y
    [root@dkmanager ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    [root@dkmanager ~]# yum install docker-ce docker-ce-cli containerd.io -y
    [root@dkmanager ~]# systemctl start docker
    [root@dkmanager ~]# systemctl enable docker

Repeat the above steps on workernode1 and workernode2.

Note: At the time of writing this article Docker Version 1.13 was available.

Step:2 Open Firewall Ports on Manager and Worker Nodes

Open the following ports in the OS firewall on the Docker manager using the commands below:

    [root@dkmanager ~]# firewall-cmd --permanent --add-port=2376/tcp
    success
    [root@dkmanager ~]# firewall-cmd --permanent --add-port=2377/tcp
    success
    [root@dkmanager ~]# firewall-cmd --permanent --add-port=7946/tcp
    success
    [root@dkmanager ~]# firewall-cmd --permanent --add-port=7946/udp
    success
    [root@dkmanager ~]# firewall-cmd --permanent --add-port=4789/udp
    success
    [root@dkmanager ~]# firewall-cmd --permanent --add-port=80/tcp
    success
    [root@dkmanager ~]# firewall-cmd --reload
    success
    [root@dkmanager ~]#

Restart the Docker service on the Docker manager:

[root@dkmanager ~]# systemctl restart docker

Open the following ports on each worker node and restart the docker service

    ~]# firewall-cmd --permanent --add-port=2376/tcp
    ~]# firewall-cmd --permanent --add-port=7946/tcp
    ~]# firewall-cmd --permanent --add-port=7946/udp
    ~]# firewall-cmd --permanent --add-port=4789/udp
    ~]# firewall-cmd --permanent --add-port=80/tcp
    ~]# firewall-cmd --reload
    ~]# systemctl restart docker
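Since the same firewall-cmd call is repeated for every port, the step can be sketched as a small loop. The function names `swarm_ports` and `open_swarm_ports` and the `FIREWALL_CMD` variable are assumptions of mine, added only so the loop can be dry-run with `echo`; the port lists are exactly the ones used in this article.

```shell
#!/bin/sh
# swarm_ports ROLE: print the ports this article opens for "manager" or "worker".
swarm_ports() {
    case $1 in
        manager) echo "2376/tcp 2377/tcp 7946/tcp 7946/udp 4789/udp 80/tcp" ;;
        worker)  echo "2376/tcp 7946/tcp 7946/udp 4789/udp 80/tcp" ;;
        *) echo "usage: swarm_ports manager|worker" >&2; return 1 ;;
    esac
}

# open_swarm_ports ROLE: open each port permanently, then reload firewalld.
# Set FIREWALL_CMD=echo to print the calls instead of running them (dry run).
open_swarm_ports() {
    ports=$(swarm_ports "$1") || return 1
    for port in $ports; do
        "${FIREWALL_CMD:-firewall-cmd}" --permanent --add-port="$port" || return 1
    done
    "${FIREWALL_CMD:-firewall-cmd}" --reload
}

# Example (as root):
#   open_swarm_ports manager   # on dkmanager
#   open_swarm_ports worker    # on workernode1 and workernode2
```

Running `FIREWALL_CMD=echo open_swarm_ports worker` first is a cheap way to review what would be executed before changing the firewall.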

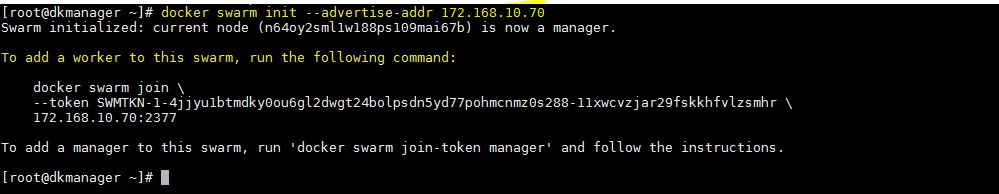

Step:3 Initialize the swarm or cluster using ‘docker swarm init’ command

Run the command below on the manager node (dkmanager) to initialize the cluster.

[root@dkmanager ~]# docker swarm init --advertise-addr 172.168.10.70

This command makes our node a manager node. We also advertise the manager’s IP address in the command so that the worker nodes can join the cluster.
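Running the init command a second time on a node that is already part of a swarm returns an error, so in a script it can help to check first. Below is a minimal sketch under that assumption; `swarm_state` and `init_swarm_if_needed` are hypothetical helper names, while `docker info --format '{{.Swarm.LocalNodeState}}'` is the Docker CLI call that reports whether the node is in a swarm.

```shell
#!/bin/sh
# swarm_state: print this node's swarm state, e.g. "active" or "inactive".
swarm_state() {
    docker info --format '{{.Swarm.LocalNodeState}}'
}

# init_swarm_if_needed ADDR: initialize a swarm unless this node is already in one.
init_swarm_if_needed() {
    addr=$1
    if [ "$(swarm_state)" = "active" ]; then
        echo "swarm already active, skipping init"
        return 0
    fi
    docker swarm init --advertise-addr "$addr"
}

# Example (on dkmanager):
#   init_swarm_if_needed 172.168.10.70
```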

Run the command below to verify the manager status and to view the list of nodes in your cluster:

    [root@dkmanager ~]# docker node ls
    ID                           HOSTNAME               STATUS  AVAILABILITY  MANAGER STATUS
    n64oy2sml1w188ps109mai67b *  dkmanager.example.com  Ready   Active        Leader
    [root@dkmanager ~]#

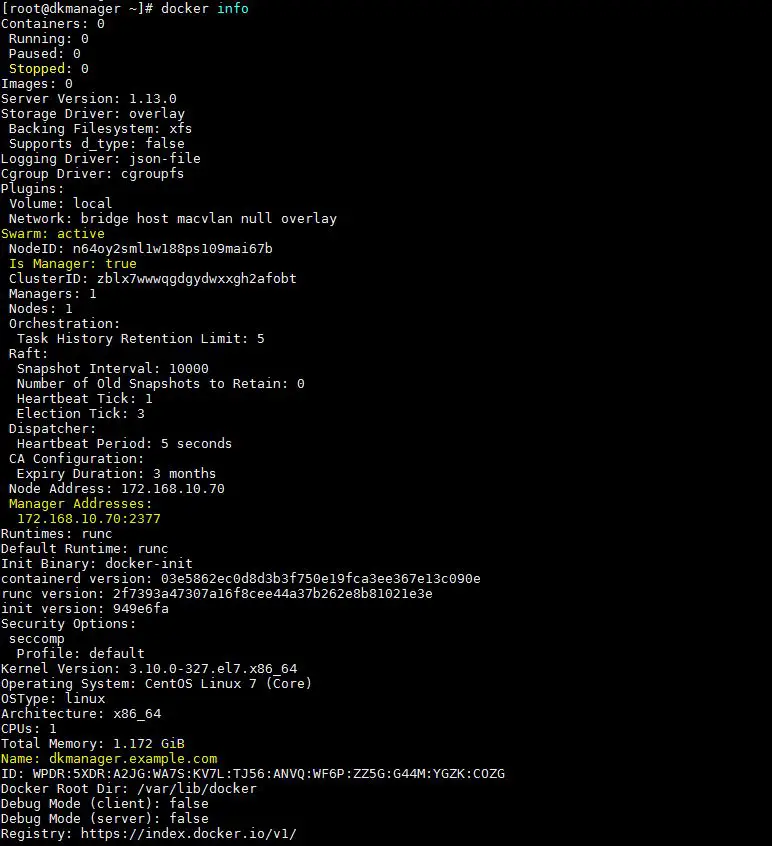

We can also use the “docker info” command to verify the status of the swarm.

Step:3 Add Worker Nodes to swarm or cluster

To add worker nodes to the swarm, run on each worker node the join command that “docker swarm init” printed when we initialized the swarm in the previous step. A sample command is shown below:

    [root@workernode1 ~]# docker swarm join --token SWMTKN-1-4jjyu1btmdky0ou6gl2dwgt24bolpsdn5yd77pohmcnmz0s288-11xwcvzjar29fskkhfvlzsmhr 172.168.10.70:2377
    This node joined a swarm as a worker.
    [root@workernode1 ~]#

    [root@workernode2 ~]# docker swarm join --token SWMTKN-1-4jjyu1btmdky0ou6gl2dwgt24bolpsdn5yd77pohmcnmz0s288-11xwcvzjar29fskkhfvlzsmhr 172.168.10.70:2377
    This node joined a swarm as a worker.
    [root@workernode2 ~]#
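If you no longer have the output of the init command, the manager can print the worker token again with `docker swarm join-token`. The sketch below rebuilds the join command from it; the function name `worker_join_command` is my own, and port 2377 is the cluster management port opened on the manager earlier in this article.

```shell
#!/bin/sh
# worker_join_command MANAGER_ADDR: print the command a new worker should run.
# Must be executed on a manager node, because only managers can read the token.
worker_join_command() {
    manager_addr=$1
    # -q prints only the token instead of the full, human-readable instructions
    token=$(docker swarm join-token -q worker) || return 1
    printf 'docker swarm join --token %s %s:2377\n' "$token" "$manager_addr"
}

# Example (on dkmanager):
#   worker_join_command 172.168.10.70
```

Running `docker swarm join-token worker` without `-q` prints a ready-made join command as well; the function is only useful when you want the token in a script.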

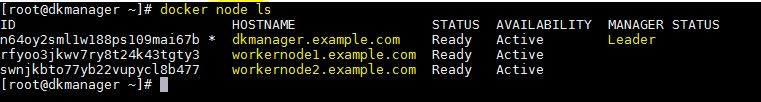

Verify the node status by running “docker node ls” on the Docker manager.
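The same check can be done without reading the table by eye. This is a rough sketch, assuming the `{{.Status}}` placeholder of `docker node ls --format`; the helper names `ready_node_count` and `expect_ready_nodes` are hypothetical.

```shell
#!/bin/sh
# ready_node_count: number of nodes the manager currently reports as "Ready".
ready_node_count() {
    docker node ls --format '{{.Status}}' | grep -c '^Ready$'
}

# expect_ready_nodes N: succeed only if exactly N nodes are Ready.
expect_ready_nodes() {
    expected=$1
    # grep -c exits non-zero when the count is 0, so ignore its exit status
    actual=$(ready_node_count || true)
    if [ "$actual" -eq "$expected" ]; then
        echo "ok: $actual nodes ready"
    else
        echo "expected $expected ready nodes, found $actual" >&2
        return 1
    fi
}

# Example (on dkmanager, after both workers have joined):
#   expect_ready_nodes 3
```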

At this point our Docker swarm cluster is up and running with two worker nodes. In the next step we will see how to define a service.

Step:4 Launching service in Docker Swarm mode

In Docker swarm mode, containers are referred to as tasks, and tasks (or containers) are launched and deployed as part of a service. Let’s assume I want to create a service named “webserver” with five containers, and I want to make sure the desired state of the service stays at five running containers.

Run the commands below on the Docker manager only.

    [root@dkmanager ~]# docker service create -p 80:80 --name webserver --replicas 5 httpd
    7hqezhyak8jbt8idkkke8wizi
    [root@dkmanager ~]#

The above command creates a service named “webserver” with a desired state of 5 containers (tasks), launched from the Docker image “httpd”. The containers will be deployed across the cluster nodes, i.e. dkmanager, workernode1 and workernode2.

List the Docker services with the command below:

    [root@dkmanager ~]# docker service ls
    ID            NAME       MODE        REPLICAS  IMAGE
    7hqezhyak8jb  webserver  replicated  5/5       httpd:latest
    [root@dkmanager ~]#

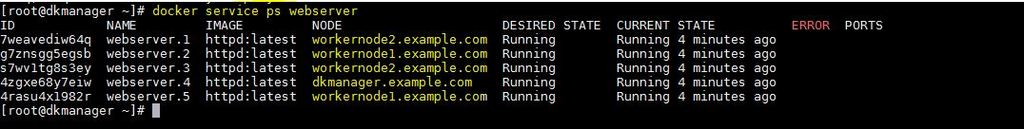
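The REPLICAS column shows running versus desired tasks, so “5/5” means the service has reached its desired state. Here is a small sketch of how a script could test that; `replicas_converged` and `service_converged` are made-up helper names, and the parsing assumes the plain “running/desired” form shown in the output above.

```shell
#!/bin/sh
# replicas_converged "RUNNING/DESIRED": succeed when both numbers are equal.
replicas_converged() {
    running=${1%%/*}   # text before the slash
    desired=${1##*/}   # text after the slash
    [ -n "$running" ] && [ "$running" = "$desired" ]
}

# service_converged NAME: check the REPLICAS column of the named service.
# The name filter matches by prefix, so only the first matching line is used.
service_converged() {
    replicas=$(docker service ls --filter "name=$1" --format '{{.Replicas}}' | head -n 1)
    replicas_converged "$replicas"
}

# Example (on dkmanager):
#   service_converged webserver && echo "webserver is at its desired state"
```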

Run the command below to view the status of your service “webserver”:

[root@dkmanager ~]# docker service ps webserver

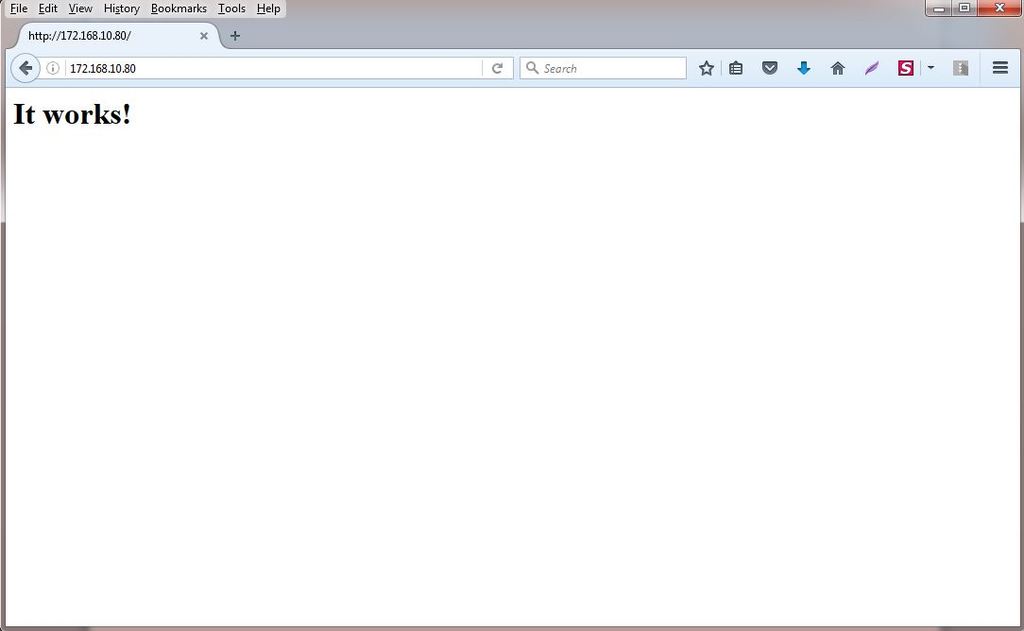

As the above output shows, the containers are deployed across the cluster nodes, including the manager node. Now we can access the web page from any of the worker nodes or from the Docker manager using the following URLs:

http://172.168.10.70 or http://172.168.10.80 or http://172.168.10.90
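The published port 80 answers on every node of the swarm, which is why all three addresses work. To check that from a terminal instead of a browser, something like the following sketch could be used; it assumes curl is installed, and `http_status` and `check_nodes` are names I made up for the example.

```shell
#!/bin/sh
# http_status URL: print the HTTP status code for URL ("000" if the connection fails).
http_status() {
    curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1"
}

# check_nodes IP...: request the web page from every address and report the result.
# Returns non-zero if any node did not answer with HTTP 200.
check_nodes() {
    failed=0
    for ip in "$@"; do
        code=$(http_status "http://$ip/" || true)
        if [ "$code" = "200" ]; then
            echo "$ip ok"
        else
            echo "$ip failed (HTTP $code)"
            failed=1
        fi
    done
    return "$failed"
}

# Example:
#   check_nodes 172.168.10.70 172.168.10.80 172.168.10.90
```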

Step:5 Now Test Container Self Healing

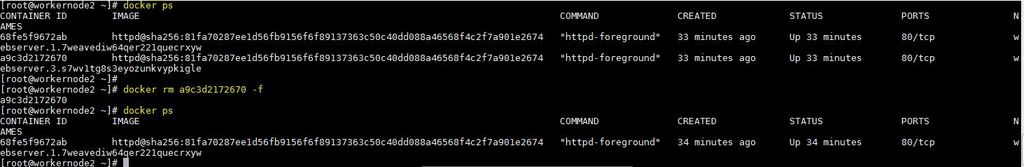

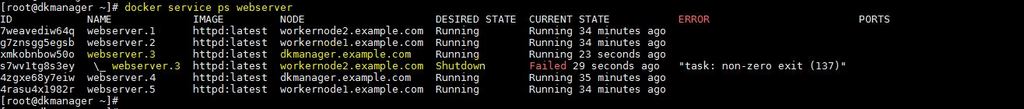

Container self-healing is an important feature of Docker swarm mode. As the name suggests, if anything goes wrong with a container, the manager makes sure that 5 containers are running again for the service “webserver”. Let’s remove a container from workernode2 and see whether a new container is launched.

    [root@workernode2 ~]# docker ps
    [root@workernode2 ~]# docker rm a9c3d2172670 -f

Now verify the service from the Docker manager and see whether a new container has been launched:

[root@dkmanager ~]# docker service ps webserver

As the above output shows, a new container has been launched on the dkmanager node because one of the containers on workernode2 was removed.
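The replacement container takes a moment to start, so if you script this test it is safer to poll than to check once. A minimal sketch, assuming the `desired-state` filter and `{{.CurrentState}}` placeholder of `docker service ps`; the function names `running_tasks` and `wait_for_replicas` are hypothetical.

```shell
#!/bin/sh
# running_tasks SERVICE: count tasks whose current state starts with "Running".
running_tasks() {
    docker service ps --filter desired-state=running --format '{{.CurrentState}}' "$1" \
        | grep -c '^Running' || true
}

# wait_for_replicas SERVICE COUNT [TRIES]: poll once per second (default 30 tries)
# until exactly COUNT tasks of SERVICE are running.
wait_for_replicas() {
    service=$1
    want=$2
    tries=${3:-30}
    while [ "$tries" -gt 0 ]; do
        if [ "$(running_tasks "$service")" -eq "$want" ]; then
            echo "$service has $want running tasks"
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    echo "timed out waiting for $service" >&2
    return 1
}

# Example (on dkmanager, right after removing a container on a worker):
#   wait_for_replicas webserver 5
```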

Step:6 Scale up and Scale down containers associated to a Service

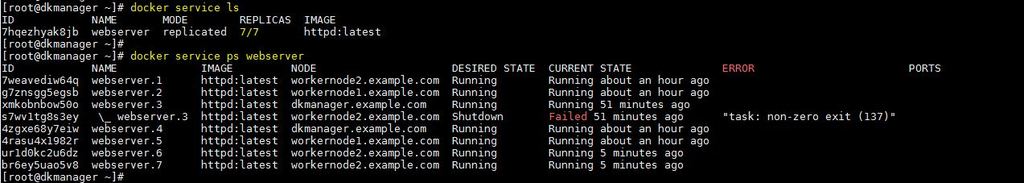

In Docker swarm mode we can scale the containers (tasks) of a service up and down. Let’s scale the service “webserver” up to 7 containers:

    [root@dkmanager ~]# docker service scale webserver=7
    webserver scaled to 7
    [root@dkmanager ~]#

Verify the service status again, for example with “docker service ls” and “docker service ps webserver”.

Let’s scale the service “webserver” down to 4 containers:

    [root@dkmanager ~]# docker service scale webserver=4
    webserver scaled to 4
    [root@dkmanager ~]#

Verify the service again, for example with “docker service ls” and “docker service ps webserver”.
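Scaling up and scaling down are the same command with a different number, so a thin wrapper that rejects typos before they reach Docker can be handy. This is only a sketch; `scale_service` is a hypothetical name and the validation rule (digits only) is my own choice.

```shell
#!/bin/sh
# scale_service SERVICE REPLICAS: validate the count, then scale the service.
scale_service() {
    service=$1
    replicas=$2
    case $replicas in
        # reject an empty value or anything containing a non-digit
        ''|*[!0-9]*)
            echo "replica count must be a non-negative integer" >&2
            return 1
            ;;
    esac
    docker service scale "$service=$replicas"
}

# Example (on dkmanager):
#   scale_service webserver 7
#   scale_service webserver 4
```

`docker service update --replicas 4 webserver` does the same job as `docker service scale webserver=4`, in case you are already changing other settings of the service with an update.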

That’s all for this article. I hope you now have an idea of how to install and configure Docker swarm mode on CentOS 7.x and RHEL 7.x. Please don’t hesitate to share your feedback and comments 🙂

Hi Pradeep,

I am a bit confused by what you said “3 CentOS servers …”. Did you mean to have three separate hosts, each of them have unique IP addresses?

Regards,

Yes, Rocco, I have used three CentOS 7 VMs / servers, each with a unique IP address.
