It is recommended to store a pod's data on a persistent volume so that the data remains available even after the pod terminates. In Kubernetes (k8s), NFS-based persistent volumes can be used inside pods. In this article we will learn how to configure a persistent volume (PV) and a persistent volume claim (PVC), and then how to use the persistent volume in k8s pods via its claim name.

I am assuming that we already have a functional k8s cluster and an NFS server. The lab setup details are as follows:

- NFS Server IP = 192.168.1.40

- NFS Share = /opt/k8s-pods/data

- K8s Cluster = One master and two worker nodes

Note: Make sure the NFS server is reachable from the worker nodes, and try mounting the NFS share once on each worker as a test.
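A quick way to run that check from each worker is sketched below. This is a minimal sketch that assumes bash and the coreutils timeout command are installed on the workers. The function name nfs_port_open is hypothetical. It only tests TCP port 2049, the standard NFS port and normally the only one NFSv4 needs. The commented commands then do the manual test mount suggested in the note.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT opens
# within TIMEOUT seconds, using bash's /dev/tcp pseudo-device.
# Args: HOST [PORT, default 2049] [TIMEOUT_SECONDS, default 3]
nfs_port_open() {
  local host="$1" port="${2:-2049}" secs="${3:-3}"
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Example usage on a worker node (NFS client utilities assumed installed):
#   nfs_port_open 192.168.1.40 && echo "NFS port reachable"
#   sudo mount -t nfs -o nfsvers=4.1 192.168.1.40:/opt/k8s-pods/data /mnt
#   ls /mnt && sudo umount /mnt
```

An open port only shows network reachability. The test mount is still what proves the export permissions are correct.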

Create an index.html file inside the NFS share, because we will mount this share in an nginx pod later in the article.

```
[kadmin@k8s-master ~]$ echo "Hello, NFS Storage NGINX" > /opt/k8s-pods/data/index.html
```

Configure NFS based PV (Persistent Volume)

To create an NFS-based persistent volume in K8s, create a YAML file on the master node with the following contents:

```
[kadmin@k8s-master ~]$ vim nfs-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    path: /opt/k8s-pods/data
    server: 192.168.1.40
```

Save and exit the file.

Now create the persistent volume from the YAML file created above:

```
[kadmin@k8s-master ~]$ kubectl create -f nfs-pv.yaml
persistentvolume/nfs-pv created
[kadmin@k8s-master ~]$
```
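If you would rather not edit the file interactively, the same manifest can be printed by a shell function and piped to kubectl, which reads a manifest from standard input with -f -. This is a sketch. The name render_nfs_pv and its argument order are hypothetical, and the field values mirror nfs-pv.yaml.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an NFS PersistentVolume manifest.
# Args: NAME SERVER EXPORT_PATH SIZE
render_nfs_pv() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $1
spec:
  capacity:
    storage: $4
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    path: $3
    server: $2
EOF
}

# Example usage:
#   render_nfs_pv nfs-pv 192.168.1.40 /opt/k8s-pods/data 10Gi | kubectl apply -f -
```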

Run the following kubectl command to verify the status of the persistent volume:

```
[kadmin@k8s-master ~]$ kubectl get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
nfs-pv   10Gi       RWX            Recycle          Available           nfs                     20s
[kadmin@k8s-master ~]$
```

The output above confirms that the PV has been created successfully and is available.

Configure Persistent Volume Claim

To mount a persistent volume inside a pod, we have to reference it through a persistent volume claim. So, let's create a persistent volume claim using the following YAML file:

```
[kadmin@k8s-master ~]$ vi nfs-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  storageClassName: nfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
```

Save and exit the file.

Run the following kubectl command to create the PVC from the YAML file above:

```
[kadmin@k8s-master ~]$ kubectl create -f nfs-pvc.yaml
persistentvolumeclaim/nfs-pvc created
[kadmin@k8s-master ~]$
```
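The claim can be generated non-interactively in the same way. This is a sketch with a hypothetical function name, render_nfs_pvc. storageClassName is kept as nfs on purpose: for this static setup it has to match the PV's storage class, or the claim will not bind to nfs-pv.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a PersistentVolumeClaim for the "nfs" class.
# Args: NAME SIZE
render_nfs_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  storageClassName: nfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: $2
EOF
}

# Example usage:
#   render_nfs_pvc nfs-pvc 10Gi | kubectl apply -f -
```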

After you run the command above, the control plane looks for a persistent volume that satisfies the claim's requirements (the same storage class name, a compatible access mode and sufficient capacity) and then binds the claim to that persistent volume, as shown below:

```
[kadmin@k8s-master ~]$ kubectl get pvc nfs-pvc
NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
nfs-pvc   Bound    nfs-pv   10Gi       RWX            nfs            3m54s
[kadmin@k8s-master ~]$
[kadmin@k8s-master ~]$ kubectl get pv nfs-pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE
nfs-pv   10Gi       RWX            Recycle          Bound    default/nfs-pvc   nfs                     18m
[kadmin@k8s-master ~]$
```

The output above confirms that the claim (nfs-pvc) is bound to the persistent volume (nfs-pv).
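In a script, you may want to wait for that Bound status before creating pods instead of checking by eye. The sketch below polls the claim's .status.phase through kubectl's standard jsonpath output. The function name wait_for_bound and its retry defaults are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a PVC until its phase is "Bound".
# Args: PVC_NAME [TRIES, default 30] [DELAY_SECONDS, default 2]
wait_for_bound() {
  local pvc="$1" tries="${2:-30}" delay="${3:-2}" i=0 phase=""
  while [ "$i" -lt "$tries" ]; do
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "$phase" = "Bound" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "PVC $pvc is still '${phase:-unknown}' after $tries checks" >&2
  return 1
}

# Example usage:
#   wait_for_bound nfs-pvc && kubectl create -f nfs-pv-pod.yaml
```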

Now we are ready to use the NFS-based persistent volume inside pods.

Use NFS based Persistent Volume inside a Pod

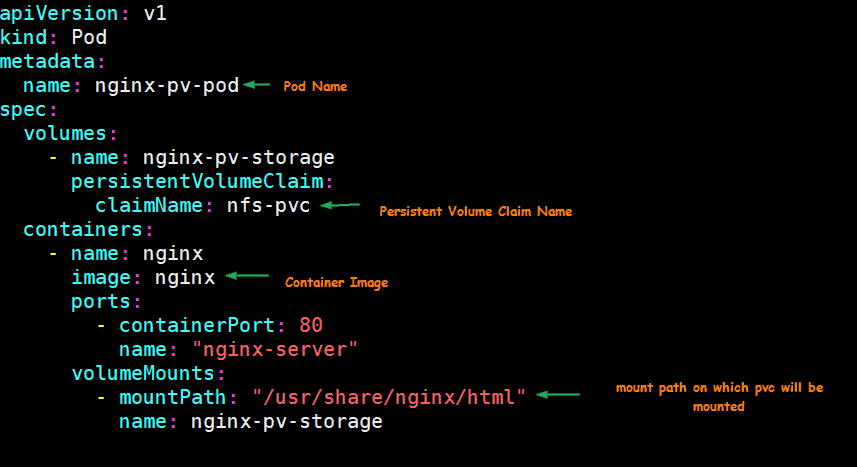

Create an nginx pod using the following YAML file. It mounts the persistent volume claim on '/usr/share/nginx/html'.

```
[kadmin@k8s-master ~]$ vi nfs-pv-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pv-pod
spec:
  volumes:
    - name: nginx-pv-storage
      persistentVolumeClaim:
        claimName: nfs-pvc
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
          name: "nginx-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: nginx-pv-storage
```

Save and close the file.

Now create the pod using the YAML file above:

```
[kadmin@k8s-master ~]$ kubectl create -f nfs-pv-pod.yaml
pod/nginx-pv-pod created
[kadmin@k8s-master ~]$
[kadmin@k8s-master ~]$ kubectl get pod nginx-pv-pod -o wide
NAME           READY   STATUS    RESTARTS   AGE   IP              NODE           NOMINATED NODE   READINESS GATES
nginx-pv-pod   1/1     Running   0          66s   172.16.140.28   k8s-worker-2   <none>           <none>
[kadmin@k8s-master ~]$
```

Note: To get more details about the pod, run kubectl describe pod <pod-name>.

The output of the commands above confirms that the pod has been created successfully. Now try to access the nginx page using curl:

```
[kadmin@k8s-master ~]$ curl http://172.16.140.28
Hello, NFS Storage NGINX
[kadmin@k8s-master ~]$
```

Perfect! The output of the curl command confirms that the persistent volume is mounted correctly inside the pod, because we get the contents of the index.html file stored on the NFS share.
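The create, check-IP and curl steps can be folded into one repeatable check. This is a sketch with a hypothetical function name, check_pod_page. It uses the real kubectl wait --for=condition=Ready and jsonpath {.status.podIP} options. Like the curl step above, it has to run from a node that can reach pod IPs directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for a pod to become Ready, look up its IP and
# compare the page nginx serves with the expected text.
# Args: POD_NAME EXPECTED_BODY
check_pod_page() {
  local pod="$1" expected="$2" ip body
  kubectl wait --for=condition=Ready "pod/$pod" --timeout=120s >/dev/null || return 1
  ip="$(kubectl get pod "$pod" -o jsonpath='{.status.podIP}')" || return 1
  body="$(curl -s --max-time 5 "http://$ip/")" || return 1
  [ "$body" = "$expected" ]
}

# Example usage:
#   check_pod_page nginx-pv-pod "Hello, NFS Storage NGINX" && echo "NFS content served"
```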

This concludes the article. I hope you now have a basic idea of how to configure and use NFS-based persistent volumes inside Kubernetes pods.


I am facing the following error:

Warning FailedMount 4m5s (x45 over 137m) kubelet, ip-172-168-10-227.us-east-2.compute.internal Unable to attach or mount volumes: unmounted volumes=[nfs-pv2], unattached volumes=[nfs-pv2 default-token-ln9bq]: timed out waiting for the condition

Could you please guide me?

Hi Krishan,

It seems the NFS server is not reachable from your worker nodes. Please make sure there is connectivity between the NFS server and the K8s nodes.

For testing, you can try to mount the NFS share manually on the worker nodes using the mount command.

Can we use this nfs-pvc in Deployments? Please explain if it's possible.
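A claim can be referenced from a Deployment's pod template in the same way as from the bare pod in the article. Because nfs-pvc uses ReadWriteMany, replicas on different nodes can mount it at the same time. The sketch below prints such a Deployment. The function name render_nginx_deployment, the app label and the replica count are hypothetical choices, not part of the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an nginx Deployment whose pods all mount
# an existing ReadWriteMany claim at nginx's web root.
# Args: NAME REPLICAS CLAIM_NAME
render_nginx_deployment() {
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $1
spec:
  replicas: $2
  selector:
    matchLabels:
      app: $1
  template:
    metadata:
      labels:
        app: $1
    spec:
      volumes:
        - name: nginx-pv-storage
          persistentVolumeClaim:
            claimName: $3
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: nginx-pv-storage
EOF
}

# Example usage:
#   render_nginx_deployment nginx-nfs 2 nfs-pvc | kubectl apply -f -
```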

Hi Pradeep,

I am currently trying to follow the tutorial; however, when I attempt to create the pod, I get the following error:

Warning FailedMount 73s (x9 over 3m21s) kubelet MountVolume.SetUp failed for volume “nfs-pv” : mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t nfs -o hard,nfsvers=4.1 10.230.87.167:/opt/k8s-pods/data /var/lib/kubelet/pods/833f9b8d-fa7b-4666-bb6c-c9cf5796f579/volumes/kubernetes.io~nfs/nfs-pv

Output: mount.nfs: access denied by server while mounting 10.230.87.167:/opt/k8s-pods/data

I have set up the NFS client and server and am able to transfer files between them using NFS. I set up NFS on one of my worker nodes, and my master and the other worker node can access it. Is it required for the NFS share to be located on the master rather than on a worker node?

Hi,

It is not mandatory to configure NFS on the master node. You can even run your NFS server outside the K8s cluster nodes. But make sure you have allowed the subnets of your master and worker nodes in the NFS export configuration. Also allow the NFS ports in the OS firewall, and create an SELinux rule if applicable.
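An "access denied by server" error often means the client's address is not covered by the server's export rules. The sketch below builds one /etc/exports line that allows each given subnet. The helper name render_exports_line and the export options rw,sync,no_subtree_check are common choices assumed here, not the author's configuration. The 192.168.1.0/24 subnet in the usage comments is also an assumption based on the lab IP. The commented commands assume a firewalld-based server.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build one /etc/exports line allowing each subnet.
# Args: EXPORT_PATH SUBNET [SUBNET...]
render_exports_line() {
  local export_path="$1" line subnet
  shift
  line="$export_path"
  for subnet in "$@"; do
    # Options go directly after the client spec, with no space in between.
    line="$line $subnet(rw,sync,no_subtree_check)"
  done
  printf '%s\n' "$line"
}

# Example usage on the NFS server (subnet and firewalld are assumptions):
#   render_exports_line /opt/k8s-pods/data 192.168.1.0/24 | sudo tee -a /etc/exports
#   sudo exportfs -ra
#   sudo firewall-cmd --permanent --add-service={nfs,mountd,rpc-bind}
#   sudo firewall-cmd --reload
```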

After running, the container returned the default index page because the mount point and the default web page location didn't match.

You can inspect the pod itself with 'kubectl exec -it nginx-pv-pod -- bash' and cd to '/usr/share/nginx/html' to find the mounted index.html file.

THANK YOU! So easy to follow and to understand 😉